The leap from standalone Large Language Models to autonomous agents is more than a technical upgrade; it is a shift from generating text to choreographing behavior. In the early stages of development, a model is often treated like a chat partner, answering questions in a simple request-response loop. But as these models evolve into agents, they become reasoning engines driving a much larger machine.

The true test comes when leaving the safety of a demo for the unpredictability of the real world. In a laboratory setting, a model’s occasional mistake is a curiosity, but in production, that same inconsistency becomes a liability. Success in this field depends on building the structural scaffolding, the guardrails and memory, that turns a fickle, probabilistic model into a reliable, goal-oriented worker. This post covers the essential patterns needed to bridge that gap: orchestration, memory management, and the collaboration between multiple agents.

The Agentic Control Loop

At the heart of any sophisticated Agentic AI architecture lies the cognitive control loop. Unlike a standard Retrieval-Augmented Generation (RAG) pipeline, which is typically a Directed Acyclic Graph (DAG) with a clear start and end, an agent implies a cycle.

The fundamental architecture acts as a state machine that executes a continuous loop of Perception → Reasoning → Action → Observation.

- Perception: The agent ingests user input and the current environmental state.

- Reasoning: The LLM analyzes the state, consulting its instructions to select the next necessary step (a “Thought”).

- Action: The system executes a tool, such as an API call, a database query, or a Python script.

- Observation: The output of the tool is fed back into the agent’s context window, updating its state.

The loop continues until the LLM generates a “stop” condition indicating goal completion. Implementing this reliably requires an Agentic AI framework capable of maintaining persistent state objects across differing compute environments.
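The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not a framework implementation: `AgentState`, `fake_reason`, `TOOLS`, and `run_agent` are hypothetical names, and `fake_reason` is a hard-coded stand-in for the LLM call so the example runs without a model.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class AgentState:
    """State object carried through every turn of the loop."""
    goal: str
    observations: list[str] = field(default_factory=list)
    done: bool = False
    answer: Optional[str] = None


def fake_reason(state: AgentState) -> dict:
    """Stand-in for the LLM (assumption): choose the next step from the state."""
    if not state.observations:
        return {"action": "add", "args": {"a": 2, "b": 3}}
    return {"action": "stop", "answer": state.observations[-1]}


# Hypothetical tool registry: tool name -> callable returning a string.
TOOLS: dict[str, Callable[..., str]] = {
    "add": lambda a, b: str(a + b),
}


def run_agent(goal: str, max_steps: int = 5) -> AgentState:
    state = AgentState(goal=goal)                  # Perception: ingest the input
    for _ in range(max_steps):
        decision = fake_reason(state)              # Reasoning: pick the next step
        if decision["action"] == "stop":           # Stop condition: goal reached
            state.done = True
            state.answer = decision["answer"]
            break
        tool = TOOLS[decision["action"]]
        result = tool(**decision["args"])          # Action: execute the tool
        state.observations.append(result)          # Observation: update the state
    return state


final = run_agent("What is 2 + 3?")
print(final.answer)
```

The `max_steps` bound is there because the cycle has no natural end if the model never emits a stop decision.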

Pillar 1: Agentic AI Orchestration

While the LLM provides raw intelligence, Agentic AI orchestration provides executive function. Orchestration defines the logic governing the decision-making process within the loop. Without structured orchestration, agents frequently suffer from recursive errors or context drift where the original objective is lost.

The patterns below constrain and guide the LLM’s reasoning capabilities.

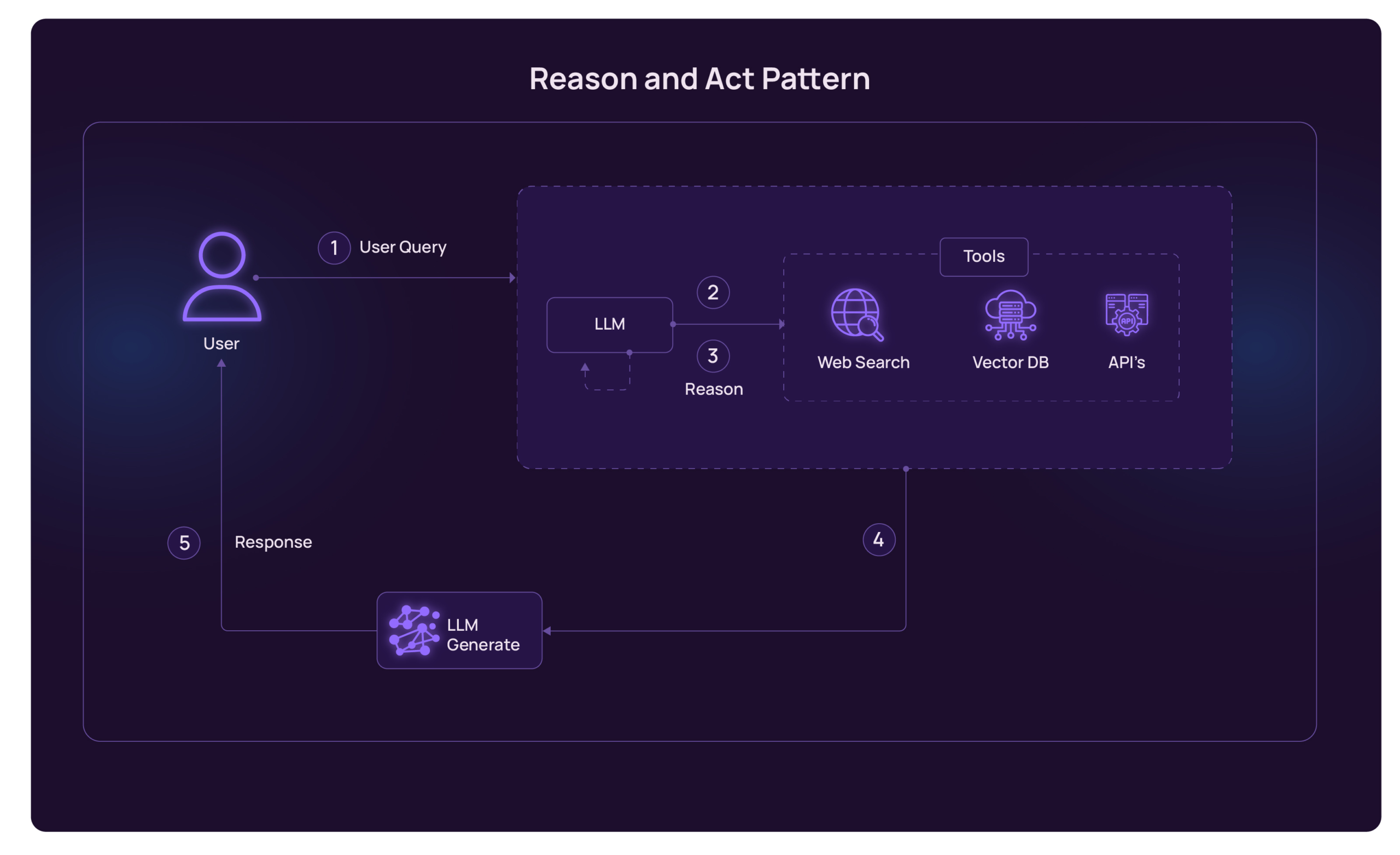

The ReAct Pattern (Reason + Act)

The foundational pattern for general-purpose agents is ReAct. It addresses the issue where models hallucinate tool actions or outputs. By separating reasoning tokens from tool invocation, ReAct improves tool-use reliability and planning discipline. In production systems, this reasoning is often internal or partially structured rather than exposed as verbose Chain-of-Thought.

Robust Agentic AI architecture implements ReAct with a “scratchpad” mechanism. The scratchpad serves as a designated area of the prompt context that accumulates the history of thoughts and observations for the current task. Management of this scratchpad is critical. If an agent fails a tool call, dumping a massive raw error log into the scratchpad often confuses the model. Effective orchestration involves summarizing observations before feeding them back into the loop to maintain context purity.
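One way to keep the scratchpad clean is to condense every tool output before it is appended. The sketch below assumes a simple rule-based summarizer (`summarize_observation`) and a hypothetical 200-character budget; a production system might use a cheaper model call for the summary instead.

```python
from dataclasses import dataclass, field

MAX_OBSERVATION_CHARS = 200  # hypothetical per-observation budget


def summarize_observation(raw: str, limit: int = MAX_OBSERVATION_CHARS) -> str:
    """Condense a tool output; for a traceback keep only its final line."""
    lines = [ln.strip() for ln in raw.strip().splitlines() if ln.strip()]
    if not lines:
        return "(empty output)"
    if any(ln.startswith("Traceback") for ln in lines):
        text = "Tool error: " + lines[-1]
    else:
        text = " ".join(lines)
    if len(text) > limit:
        text = text[: limit - 3] + "..."
    return text


@dataclass
class Scratchpad:
    """Accumulates thoughts and summarized observations for one task."""
    entries: list[str] = field(default_factory=list)

    def add_thought(self, thought: str) -> None:
        self.entries.append(f"Thought: {thought}")

    def add_observation(self, raw_output: str) -> None:
        self.entries.append(f"Observation: {summarize_observation(raw_output)}")

    def render(self) -> str:
        """Text that would be placed into the prompt context."""
        return "\n".join(self.entries)


pad = Scratchpad()
pad.add_thought("Query the orders table")
pad.add_observation(
    "Traceback (most recent call last):\n"
    '  File "db.py", line 10, in run\n'
    "    cur.execute(q)\n"
    "KeyError: 'orders'"
)
print(pad.render())
```

The model sees a single line naming the failure rather than the full stack trace, which is usually enough for it to choose a different action.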

Planning and Reflexion Patterns

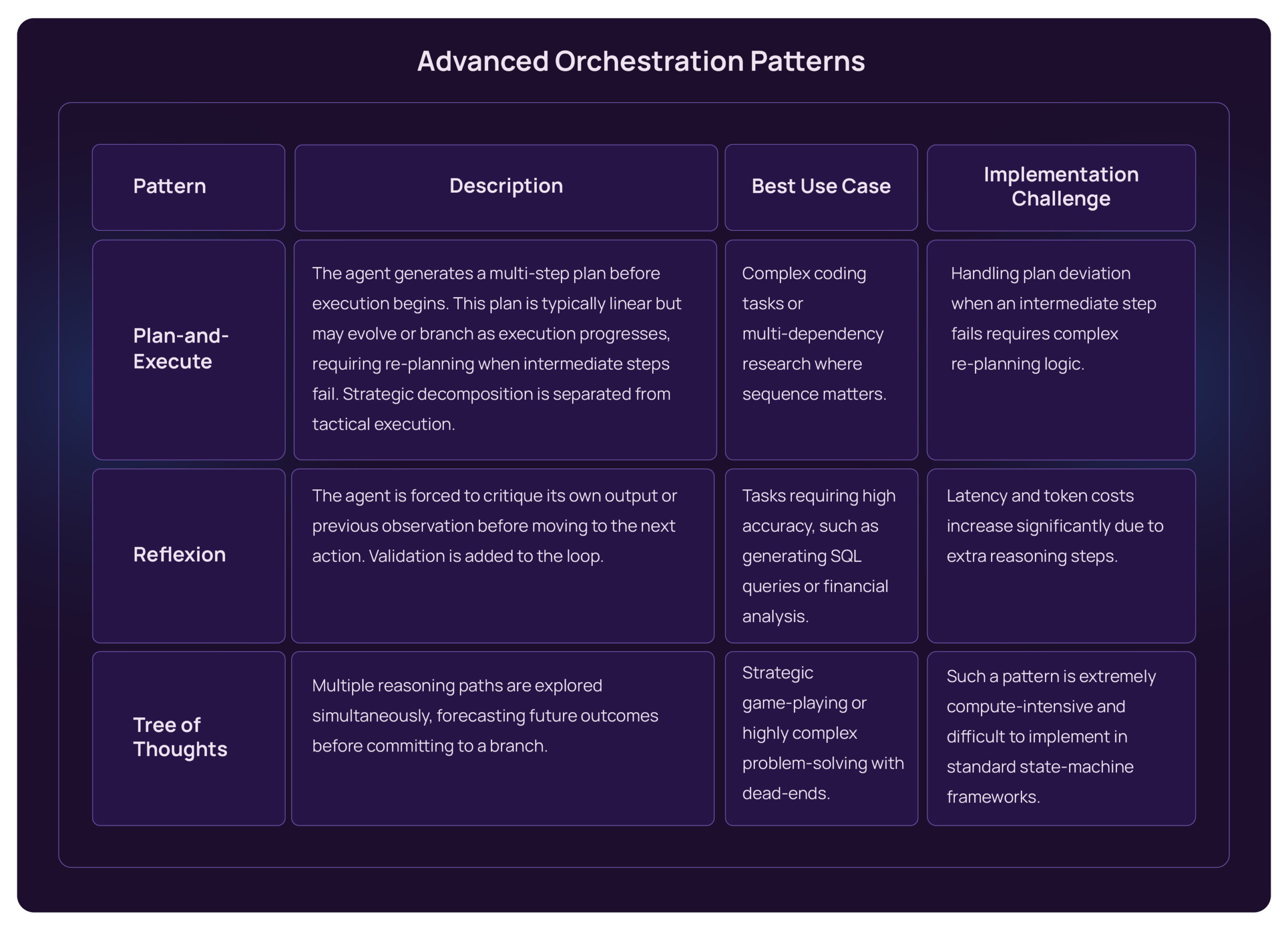

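For complex, multi-step tasks, a simple reactive loop proves inefficient because the agent effectively feels its way through the dark. Advanced patterns separate planning from execution and introduce self-correction: a planner drafts the full sequence of steps up front, an executor works through them, and a Reflexion-style critique of each failed attempt is carried into the retry.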

Implementing these patterns often requires specialized AI agent development frameworks like LangGraph or LlamaIndex. These libraries treat agent workflows as traversable graphs rather than linear chains.
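Before reaching for a framework, it can help to see the shape of plan-then-execute with reflection in plain Python. Everything here is an illustrative assumption: `plan` and `reflect` stand in for LLM calls, and `flaky_executor` is a toy executor that fails once so the retry path is visible.

```python
from typing import Callable, Optional


def plan(goal: str) -> list[str]:
    """Stand-in planner (assumption): a real system would ask the LLM for steps."""
    return ["fetch", "transform", "report"]


def reflect(step: str, error: str) -> str:
    """Stand-in reflection (assumption): turn a failure into a hint for the retry."""
    return f"Previous attempt at '{step}' failed with: {error}. Try a fallback."


def execute_plan(
    goal: str,
    executor: Callable[[str, Optional[str]], str],
    max_retries: int = 1,
) -> list[str]:
    """Run each planned step; on failure, reflect and retry with the hint."""
    log: list[str] = []
    for step in plan(goal):
        hint: Optional[str] = None
        for _ in range(max_retries + 1):
            try:
                log.append(executor(step, hint))
                break
            except RuntimeError as exc:
                hint = reflect(step, str(exc))
                log.append(f"reflect: {hint}")
        else:
            # Every attempt failed, so stop instead of continuing blindly.
            raise RuntimeError(f"step '{step}' failed after {max_retries + 1} attempts")
    return log


def flaky_executor(step: str, hint: Optional[str]) -> str:
    """Toy executor: 'transform' fails unless a reflection hint is supplied."""
    if step == "transform" and hint is None:
        raise RuntimeError("schema mismatch")
    return f"done: {step}"


for line in execute_plan("build weekly report", flaky_executor):
    print(line)
```

Graph-oriented frameworks express the same idea as nodes for planning, execution, and reflection, with a conditional edge that routes failures back through the reflection node.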

Pillar 2: Memory Architecture

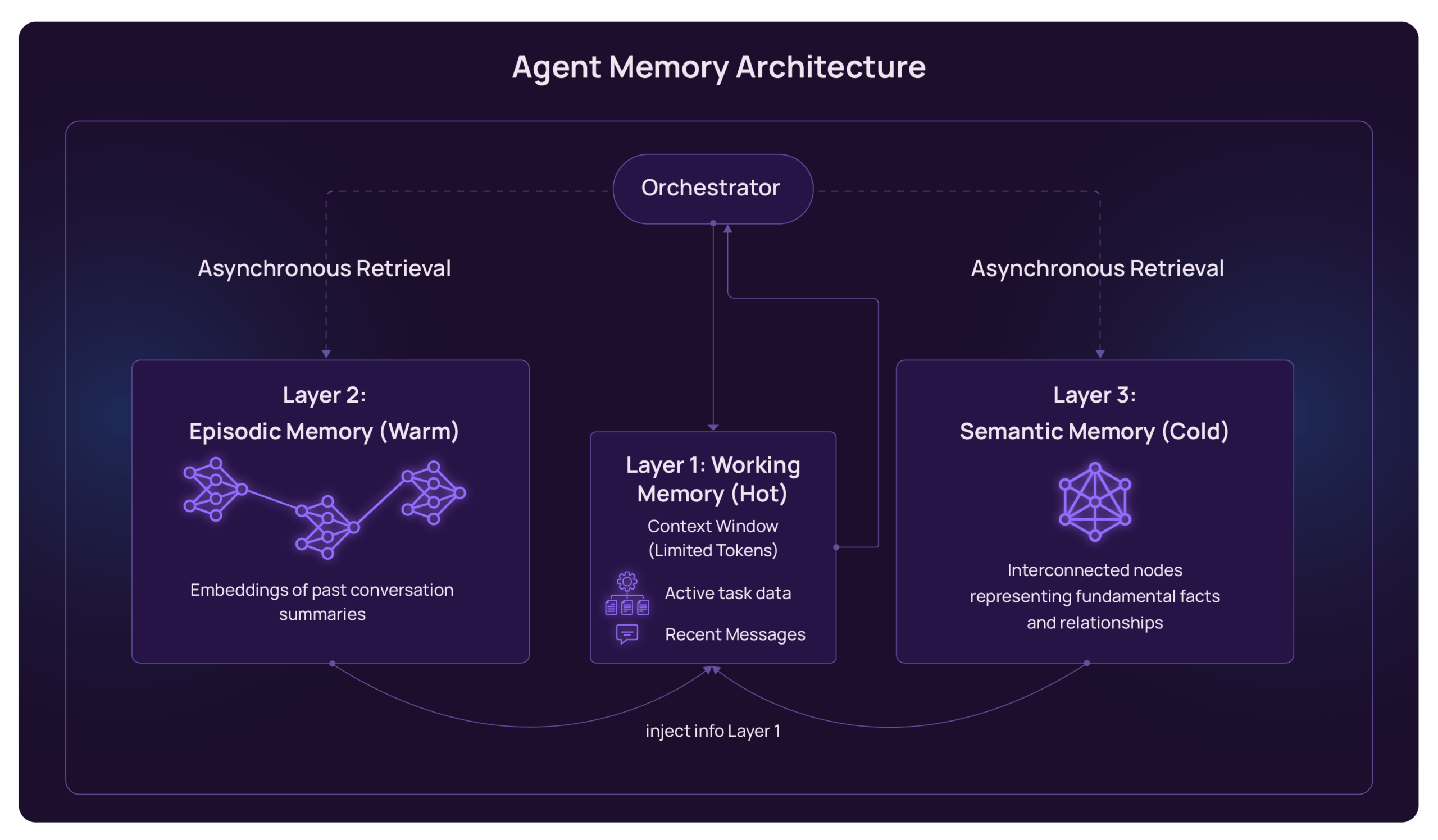

Memory distinguishes a transient chatbot from a persistent agent. A chatbot exists in the immediate moment, whereas an agent must maintain continuity over time to achieve long-horizon goals. In high-level Agentic AI architecture, memory is not a monolith but a distributed system comprising short-term context and long-term retrieval layers.

Short-Term Memory

The immediate constraint in AI agent development is the model’s token limit. Short-term memory acts as the sliding window of the current session.

Naive approaches push entire chat histories into the prompt. Such a strategy degrades performance as “distractor” tokens dilute the model’s attention. Production-grade patterns employ conversation summarization with entity extraction. Instead of raw message logs, the system maintains a structured profile object containing current goals, identified entities, and recent tool outputs.
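A structured profile object of this kind might look like the following sketch. The field names and the three-item window for tool outputs are assumptions for illustration; entity extraction itself is left out and would typically be another model call.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class SessionProfile:
    """Compact short-term memory that replaces the raw message log."""
    current_goal: str = ""
    entities: dict[str, str] = field(default_factory=dict)
    # Bounded window: the oldest tool output is dropped automatically.
    recent_tool_outputs: deque = field(default_factory=lambda: deque(maxlen=3))

    def record_entity(self, name: str, kind: str) -> None:
        self.entities[name] = kind

    def record_tool_output(self, output: str) -> None:
        self.recent_tool_outputs.append(output)

    def to_prompt(self) -> str:
        """Render the profile as the context block injected into the prompt."""
        entity_text = ", ".join(f"{n} ({k})" for n, k in self.entities.items()) or "none"
        tools_text = "; ".join(self.recent_tool_outputs) or "none"
        return (
            f"Goal: {self.current_goal}\n"
            f"Entities: {entity_text}\n"
            f"Recent tool outputs: {tools_text}"
        )


profile = SessionProfile(current_goal="Refund order 1182")
profile.record_entity("order 1182", "order")
for i in range(4):
    profile.record_tool_output(f"out{i}")
print(profile.to_prompt())
```

The prompt size stays roughly constant no matter how long the session runs, because only the goal, the known entities, and the last few tool outputs are rendered.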

Long-Term and Semantic Memory

Episodic memory allows the agent to recall past experiences across different sessions. Vector databases, the standard backing store for RAG, work well for retrieving documents, but vector retrieval alone optimizes for semantic similarity rather than relational understanding. In domains where dependencies and system relationships matter, this can limit reasoning fidelity.

As a result, some advanced Agentic AI architectures experiment with GraphRAG approaches, where extracted entities and relationships are stored in a knowledge graph. Graph-based memory can enhance reasoning over structured domains, though it introduces additional complexity and does not universally outperform traditional RAG.
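To make the difference concrete, here is a toy in-memory graph store that answers a multi-hop dependency question. `GraphMemory` and the service names are hypothetical, and a real GraphRAG system would use a graph database plus an extraction step; the point is only that a relationship chain is traversed explicitly rather than inferred from embedding similarity.

```python
from collections import defaultdict, deque
from typing import Optional

Triple = tuple[str, str, str]  # (subject, relation, object)


class GraphMemory:
    """Stores extracted facts as directed, labeled edges."""

    def __init__(self) -> None:
        self._edges: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add_fact(self, subject: str, relation: str, obj: str) -> None:
        self._edges[subject].append((relation, obj))

    def find_path(self, start: str, goal: str) -> Optional[list[Triple]]:
        """Breadth-first search; returns the chain of facts linking start to goal."""
        queue: deque = deque([(start, [])])
        seen = {start}
        while queue:
            node, path = queue.popleft()
            if node == goal:
                return path
            for relation, nxt in self._edges.get(node, []):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, path + [(node, relation, nxt)]))
        return None


memory = GraphMemory()
memory.add_fact("checkout-service", "depends_on", "payments-api")
memory.add_fact("payments-api", "depends_on", "postgres-primary")

# "Would an outage of postgres-primary affect checkout-service?"
print(memory.find_path("checkout-service", "postgres-primary"))
```

The returned chain of triples can be placed in the prompt as evidence, which gives the model the dependency path instead of asking it to guess one.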

Pillar 3: Multi-Agent Systems (MAS)

A single agent is a generalist. As task complexity grows, the generalist’s prompt becomes overloaded with tool definitions and instructions, leading to confusion. The solution in scalable Agentic AI architecture is a Multi-Agent System.

In MAS, agents are treated similarly to microservices. Each agent possesses a specialized persona, a restricted toolset, and a specific domain focus. This shifts the core engineering challenge from prompt design to protocol design: defining how agents communicate, validate, and arbitrate results.

The Hierarchical Supervisor Pattern

The Hierarchical Supervisor is a highly stable pattern for enterprise AI agent development as it models a strict command structure.

- The Supervisor: A routing agent with no external tools. Its sole function is to analyze the incoming request and delegate control to the appropriate worker.

- The Workers: Specialized agents (e.g., a “Coder,” a “Web Researcher,” a “Data Analyst”).

- The Handoff: The Supervisor transfers control. The worker executes its internal loop. Upon completion, a structured “handoff” message is returned to the Supervisor with results.

Such isolation ensures the “Coder” agent does not need knowledge of web search tools, keeping the context window clean and execution accurate.
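The supervisor, worker, and handoff roles can be sketched as follows. This is an assumption-laden toy: `route` uses keyword matching where a real supervisor would call an LLM, and each worker returns immediately instead of running its own loop. The `Handoff` dataclass shows what a structured return message could contain.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Handoff:
    """Structured message a worker returns to the supervisor."""
    worker: str
    status: str
    result: str


def coder(task: str) -> Handoff:
    # A real worker would run its own control loop with code-only tools.
    return Handoff(worker="coder", status="ok", result=f"patch drafted for: {task}")


def researcher(task: str) -> Handoff:
    # A real worker would run its own control loop with search-only tools.
    return Handoff(worker="researcher", status="ok", result=f"sources gathered for: {task}")


# Each worker is registered by name; the supervisor itself holds no tools.
WORKERS: dict[str, Callable[[str], Handoff]] = {
    "coder": coder,
    "researcher": researcher,
}


def route(request: str) -> str:
    """Stand-in for LLM routing (assumption): pick a worker by keyword."""
    text = request.lower()
    if any(word in text for word in ("bug", "code", "function")):
        return "coder"
    return "researcher"


def supervise(request: str) -> Handoff:
    """Delegate the request and return the worker's handoff message."""
    worker_name = route(request)
    return WORKERS[worker_name](request)


print(supervise("Fix the bug in parse_date"))
print(supervise("Find recent pricing for GPUs"))
```

Because the supervisor only ever sees `Handoff` objects, a worker's prompt and toolset can change without affecting the routing logic.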

Collaborative Debate and Consensus

For workflows requiring creativity or rigorous vetting, a strict hierarchy proves too rigid. Collaborative patterns utilize multiple agents to critique one another.

In a “Multi-Persona Prompting” setup, one agent might generate a software solution, while a second agent, prompted as a “Security Auditor,” reviews the output for vulnerabilities. The orchestration layer manages this adversarial loop, finalizing the output only when consensus is reached or escalating when a round limit is hit. Such a pattern can reduce error rates in high-stakes tasks, at the cost of additional model calls per result.
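A generator and auditor loop of this kind can be sketched as below. Both agents are hard-coded stand-ins (assumptions) so the example runs without a model: `generator` switches to a parameterized query once it receives that feedback, and `security_auditor` flags f-string SQL. The orchestration function `debate` is the part that carries over to a real system.

```python
def generator(task: str, feedback: list[str]) -> str:
    """Stand-in for the generating agent (assumption): revises based on feedback."""
    if "parameterized" in " ".join(feedback):
        return "cursor.execute('SELECT * FROM users WHERE id = %s', (user_id,))"
    return "cursor.execute(f'SELECT * FROM users WHERE id = {user_id}')"


def security_auditor(draft: str) -> list[str]:
    """Stand-in for the auditing agent (assumption): returns a list of issues."""
    issues = []
    if "f'" in draft or 'f"' in draft:
        issues.append("Use a parameterized query instead of string interpolation.")
    return issues


def debate(task: str, max_rounds: int = 3) -> tuple[str, int]:
    """Alternate generation and critique until the auditor raises no issues."""
    feedback: list[str] = []
    for round_no in range(1, max_rounds + 1):
        draft = generator(task, feedback)
        issues = security_auditor(draft)
        if not issues:
            return draft, round_no  # consensus: the auditor has no objections
        feedback.extend(issues)
    raise RuntimeError("no consensus within the round budget; escalate to a human")


final_draft, rounds = debate("Look up a user by id")
print(rounds, final_draft)
```

The round limit matters: two agents that never agree would otherwise loop indefinitely.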

Implementation: The Ecosystem of Frameworks

Implementing these patterns from scratch involves significant boilerplate code, including retry logic, HTTP handling, and parsing broken JSON. Selection of the correct Agentic AI framework is critical.

LangChain and LangGraph

LangGraph has become a widely adopted framework for agent development by modeling workflows explicitly as graphs. Nodes (agents/tools) and edges (logic) are defined, aligning with the state machine nature of agents. It allows for checkpointing and execution replay (“time travel”), enabling failed actions to be retried from a prior state.
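The idea behind checkpointing and replay can be shown without any framework. The sketch below is plain Python and deliberately does not use LangGraph's API; `CheckpointedRun` and the node functions are hypothetical names. Each node receives a copy of the latest state, so a node that fails halfway leaves the last good checkpoint untouched.

```python
import copy
from typing import Callable


class CheckpointedRun:
    """Keeps a snapshot of the state after every successful node."""

    def __init__(self, initial_state: dict) -> None:
        self.checkpoints: list[dict] = [copy.deepcopy(initial_state)]

    def step(self, node: Callable[[dict], dict]) -> dict:
        """Run one node on a copy of the latest state; save only on success."""
        state = copy.deepcopy(self.checkpoints[-1])
        new_state = node(state)  # if this raises, no checkpoint is added
        self.checkpoints.append(new_state)
        return new_state

    def rewind(self, index: int) -> dict:
        """Discard checkpoints after `index` and return the state at that point."""
        self.checkpoints = self.checkpoints[: index + 1]
        return copy.deepcopy(self.checkpoints[-1])


def fetch(state: dict) -> dict:
    return {**state, "docs": state["docs"] + ["a"]}


def broken_summarize(state: dict) -> dict:
    state["docs"].append("corrupt")  # partial mutation before the failure
    raise ValueError("model timeout")


run = CheckpointedRun({"docs": []})
run.step(fetch)
try:
    run.step(broken_summarize)
except ValueError:
    pass  # the last checkpoint is still the clean post-fetch state
print(run.checkpoints[-1])
```

Retrying from `run.checkpoints[-1]` re-executes only the failed node rather than the whole workflow.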

Microsoft Semantic Kernel

Semantic Kernel takes a function-first approach. It excels in Agentic AI orchestration where agents must be embedded deeply into existing .NET or Python enterprise applications. Plugins (called skills in earlier versions) expose native code functions as tools, making integration with legacy systems smoother.

AutoGPT and CrewAI

These frameworks abstract much of the complexity, offering pre-built “teams” of agents. While excellent for rapid prototyping, such tools often lack the granular control required for production AI agent development where defining exactly how the Supervisor handles a specific edge case is necessary.

Failure Modes and Production Hardening

No discussion on architecture is complete without addressing failure. In deterministic software, bugs are fixed. In probabilistic AI, variance is managed. In production systems, failure mitigation must also account for security, access control, and side-effect control when tools interact with real-world systems.

- Tool Divergence: The LLM might try to call a tool that does not exist or hallucinate parameters. The design pattern here is Schema Enforcement. The LLM is prevented from generating raw text for tools. Instead, structured objects (like Pydantic models in Python) are enforced and validated before execution.

- Infinite Loops: An agent failing to solve a problem may retry forever. Step Budgets are implemented to mitigate this. The orchestration layer tracks the number of steps or “hops” in a graph. If the count exceeds a threshold, the loop is hard-killed, and a “Human-in-the-Loop” exception is raised.

- Context Overflow: Even with summarization, conversations grow. Token Monitoring middleware automatically triggers a summarization or truncation event when the context hits a percentage of the model’s limit.
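The three mitigations above can be sketched together in stdlib Python. To keep the example dependency-free, a `dataclass` stands in for the Pydantic model mentioned earlier; `SearchArgs`, `TOOL_SCHEMAS`, `StepBudget`, `needs_compaction`, and the 0.8 threshold are all illustrative assumptions.

```python
from dataclasses import dataclass, fields


class HumanInTheLoopRequired(Exception):
    """Raised when the agent must hand control to a person."""


@dataclass(frozen=True)
class SearchArgs:
    query: str
    limit: int = 5


# Hypothetical registry: only tools listed here may be called.
TOOL_SCHEMAS = {"search": SearchArgs}


def validate_tool_call(name: str, args: dict):
    """Schema enforcement: reject unknown tools and malformed parameters."""
    if name not in TOOL_SCHEMAS:
        raise ValueError(f"unknown tool: {name}")
    schema = TOOL_SCHEMAS[name]
    try:
        parsed = schema(**args)  # TypeError on missing or unexpected parameters
    except TypeError as exc:
        raise ValueError(f"bad arguments for {name}: {exc}") from exc
    for f in fields(schema):
        if not isinstance(getattr(parsed, f.name), f.type):
            raise ValueError(f"{name}.{f.name} must be {f.type.__name__}")
    return parsed


class StepBudget:
    """Step budget: hard-stop the loop after a fixed number of hops."""

    def __init__(self, max_steps: int) -> None:
        self.max_steps = max_steps
        self.used = 0

    def tick(self) -> None:
        self.used += 1
        if self.used > self.max_steps:
            raise HumanInTheLoopRequired(f"step budget of {self.max_steps} exceeded")


def needs_compaction(token_count: int, context_limit: int, threshold: float = 0.8) -> bool:
    """Token monitoring: signal when the context should be summarized or truncated."""
    return token_count >= threshold * context_limit


print(validate_tool_call("search", {"query": "gpu prices"}))
print(needs_compaction(8_500, 10_000))
```

In an orchestration loop, `budget.tick()` would be called once per iteration, `validate_tool_call` before every tool execution, and `needs_compaction` before every model call.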

Strategic Outlook

The technology sector is moving past the novelty phase of generative AI into the integration phase. Building systems that deliver autonomous value means treating LLMs not as magic boxes but as components in a distributed system.

Agentic design patterns such as reactive loops, hierarchical supervision, and graph-based memory provide the necessary blueprint. A successful Agentic AI architecture is defined not just by the intelligence of the model used, but by the robustness of the control structures surrounding it. Mastering Agentic AI orchestration and multi-agent coordination allows engineering teams to build software that actively works to achieve complex goals.

Ready to build production-grade agentic systems? AppsTek Corp specializes in architecting and implementing enterprise agentic AI solutions. We help organizations navigate orchestration patterns, memory design, multi-agent coordination, and operational concerns. Contact AppsTek Corp to discuss production deployment strategies and discover how proven architectural patterns accelerate reliable agentic AI implementation.

About The Author

Wanpherlin M. Shangpliang is a Marketing Manager at AppsTek Corp, driving strategic marketing initiatives across digital, content, and brand communications. She focuses on positioning AppsTek’s AI offerings and comprehensive digital engineering services while supporting market outreach across key industries. With expertise in campaign management, content strategy, and audience engagement, Wanpherlin builds effective marketing programs that drive measurable growth and strengthen AppsTek’s overall presence.